This week, the scientific journal Frontiers in Cell and Developmental Biology published research featuring fake imagery created with MidJourney, one of the most popular AI image generators.

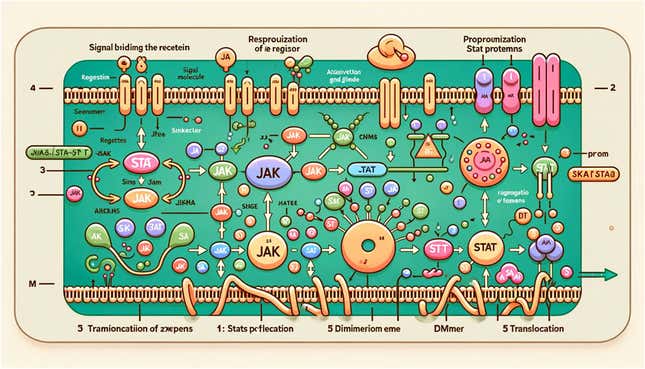

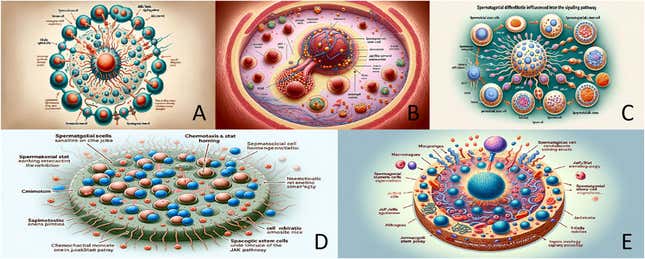

The open-access paper explores the relationship between stem cells in the mammalian testis and the signaling pathways responsible for mediating inflammation and cancer in those cells. The written content of the paper does not appear to be fraudulent, but its most striking aspects are not in the research itself. Rather, they are the inaccurate and bizarre depictions of rat testicles, signaling pathways, and stem cells.

The AI-generated rat diagram shows a rat (helpfully and correctly labeled) whose upper body has been labeled as a “centogenic stem cell.” What appears to be a very large rat penis is labeled “Desilced,” with insets on the right side to highlight “Isolate cesargotomer cell,” “DK,” and “RetAT.” Hmm.

According to Frontiers' editorial guidelines, manuscripts are subject to an "initial quality check" by the research integrity team and the handling editor before peer review begins. In other words, multiple people ostensibly reviewed the work before the images were published.

To the researchers' credit, the paper does state that its images were produced by MidJourney. But Frontiers' page on policies and publication ethics notes that corrections may be submitted if "there is an error in a figure that does not alter the conclusions" or "there are mislabeled figures," among other reasons. The AI-generated imagery here certainly seems to fall under those categories. Dingjun Hao, a researcher at Xi'an Jiaotong University and co-author of the study, did not immediately respond to Gizmodo's request for comment.

The rat image is plainly wrong, even if you have never dissected a rat's genitals. Other figures in the paper may look credible to the untrained eye, at least at first glance. But even someone who has never opened a biology textbook would see, on closer inspection, that the labels on each diagram are not quite English, a clear sign of AI-generated text in images.

The article was edited by an expert in animal breeding at the National Dairy Research Institute in India, and reviewed by researchers at Northwestern Medicine and the National Institute of Animal Nutrition and Physiology. So how did these absurd pictures get published? Frontiers in Cell and Developmental Biology did not immediately respond to a request for comment.

OpenAI's text generator ChatGPT is proficient enough to get fake research past the supposedly discerning eyes of reviewers. A study conducted by researchers at Northwestern University and the University of Chicago found that human experts were fooled by ChatGPT-generated scientific abstracts 32% of the time.

So, just because these images are clearly bullshit masquerading as science, we shouldn't overlook AI engines' ability to pass off BS as real. Importantly, the authors of that study warned that AI-generated articles could lead to a scientific integrity crisis. That crisis seems to be well underway.

Alexander Pearson, a data scientist at the University of Chicago and co-author of that study, said at the time that "generative text technology has a great potential for democratizing science, for example making it easier for non-English-speaking scientists to share their work with the broader community," but "it's imperative that we think carefully on best practices for use."

AI's popularity has helped scientifically wrong hypotheses make their way into scientific publications and news articles. AI images are easy to create and often visually compelling, but AI is also unwieldy, and it is surprisingly difficult to capture all the nuances of scientific accuracy in a single diagram or illustration.

The recent paper is a far cry from the bogus papers of years past, a pantheon that includes hits like "What's the Deal With Birds?" and this Star Trek-themed work on "Rapid Genetic and Developmental Morphological Change Following Extreme Celerity."

Sometimes, a paper that passes peer review is simply ridiculous. Other times, it's a sign that "paper mills" are churning out so-called research with no scientific merit. In 2021, Springer Nature was forced to retract 44 papers in the Arabian Journal of Geosciences for being utter nonsense.

In this case, the research may have been sound, but the inclusion of MidJourney-generated images calls the entire study into question. The average reader may struggle to focus on the signaling pathways while still busy counting how many balls the rat is supposed to have.